In the previous post, we argued that the ability to read and interpret data model diagrams is an important skill for user interface designers working on business information systems or other applications that involve a lot of structured data. In this post, we will take a very brief look at UML class diagrams, a popular way to visually depict a data model. (UML is the Unified Modeling Language, a standard for visual software modelling.)

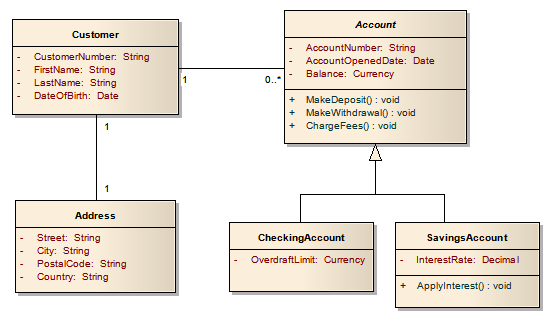

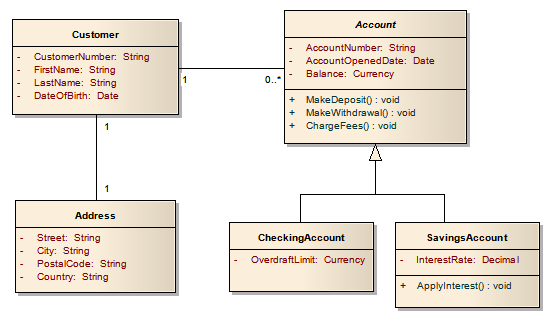

In a UML class diagram, we can represent entities (classes), attributes, operations, and various types of relationships between entities. Let’s understand these by examining the following simplistic UML class diagram showing part of the data model for a banking application:

The boxes represent the various entities that the product needs to know about. (When we are talking about things in the domain, we usually use the term entities, but when these entities are implemented in software, they are more frequently called classes. We’ll use the term entities here for clarity.)

An entity is a definition of what information is needed to represent a thing or a concept, or a set of similar things or concepts. Entities basically represent nouns.

In our example diagram above, the Customer entity represents all of the pieces of information, or attributes, that our application needs to know about customers: their customer numbers, their first and last names, and their dates of birth. For each entity, there will usually be many concrete instances of that entity (also known as instantiations, records, or objects) known by the system, and each instance will have its own separate set of values for each attribute.

In other words, for our Customer entity, if the system knows about 500 different customers, then there will be 500 instances of the Customer entity in the system, and each of those 500 Customer instances will have its own set of values for the attributes. One Customer instance might be for a customer with a customer number of 1234567, first name John, last name Smith, and date of birth 05.05.1965. Another Customer instance might be for customer number 2345678, Alice Jones, born 07.07.1977.
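For readers who like to see how this looks in software, here is a minimal Python sketch of the Customer entity and the two instances just described. The attribute names follow the diagram; the Python types (for example, storing the customer number as an integer) are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Customer:
    # Attribute names follow the diagram; the Python types are assumptions.
    customer_number: int
    first_name: str
    last_name: str
    date_of_birth: date


# Two instances of the same entity, each with its own attribute values.
john = Customer(1234567, "John", "Smith", date(1965, 5, 5))
alice = Customer(2345678, "Alice", "Jones", date(1977, 7, 7))

# A system that knows about 500 customers would hold 500 such instances.
customers = [john, alice]
```

The entity is the definition (the `Customer` class); the instances are the individual records built from it.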

In UML class diagrams, the box for an entity is divided into multiple sections:

- The top compartment contains the name of the entity, such as Customer.

- The middle compartment lists the attributes for the entity. Attributes are the fields that describe the properties of the entity. So if the entity is a noun, the attributes might be thought of as adjectives that describe the noun. For the Customer entity, the attributes are CustomerNumber, FirstName, LastName, and DateOfBirth. In domain models, usually just the attribute names are given; for data models suitable for implementation in software, the data type for each attribute is usually given as well. Here, “String” means text, “Date” means a calendar date, and so on.

- The bottom compartment of the box is optional and is frequently omitted. It lists any operations or actions that software implementations of instances of that entity can perform. These operations can be thought of as verbs that the entity, a noun, can do. Not all entities will have operations, and operations are typically listed only in data models and not in domain models. (The notation used for naming the operations usually reflects the programming language being used in the system, which is why the example diagram here includes the additional brackets and the “void” annotation.)
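To make the three compartments concrete, here is a rough Python sketch of how one box might translate into code. It uses the Account entity from the example; the data types and the `amount` parameter are assumptions, since the article does not spell them out.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal


@dataclass
class Account:  # top compartment: the entity name
    # Middle compartment: the attributes (types are assumptions).
    account_number: str
    account_opened_date: date
    balance: Decimal = Decimal("0")

    # Bottom compartment: the operations. "-> None" plays the role
    # that "void" plays in the diagram's notation.
    def make_deposit(self, amount: Decimal) -> None:
        self.balance += amount

    def make_withdrawal(self, amount: Decimal) -> None:
        self.balance -= amount


account = Account("ACC-1", date(2024, 1, 15))
account.make_deposit(Decimal("100"))
account.make_withdrawal(Decimal("30"))
print(account.balance)  # 70
```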

Relationships

Relationships between entity classes are represented with lines drawn between boxes. The UML standard uses various styles and combinations of lines and arrows to indicate different types of relationships. Let’s examine the two most common relationship types: associations and inheritance.

Association relationships

The most common type of relationship is the association, represented by a plain line linking two boxes. An association relationship means that two entity instances (objects) can be linked together. In our example diagram above, Customer instances can be linked with Address instances and Account instances.

The numbers alongside the relationship lines indicate what is called the cardinality of the relationship. In the sample diagram, the association between Customer and Address is what is called a 1-to-1 relationship, and this means that every Customer instance must be linked to exactly one Address instance. In other words, a Customer cannot have more than one address, nor can a Customer exist who doesn’t have an address on file; every Customer instance must always be linked to an Address instance. And likewise, an Address instance cannot exist in the system if it is not linked to a Customer, and an Address instance can only be linked with one specific Customer. This particular model diagram doesn’t allow two customers (a husband and wife, for instance) who happen to live at the same postal address to share one Address record in the system; each Customer must have its own Address, which means there would be data duplication (two Address records holding the same postal address data). That duplication can have usability consequences: a change of address, for example, would have to be entered separately for each customer.
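One way to picture the 1-to-1 rule in code is sketched below. The Address fields and the `register_customer` helper are hypothetical (the article does not list Address attributes), and the second customer number is made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Address:
    # Hypothetical fields; the article does not list Address attributes.
    street: str
    city: str
    postal_code: str


@dataclass
class Customer:
    customer_number: int
    last_name: str
    address: Address  # required, and exactly one: the "1" on the Address end


def register_customer(customer_number: int, last_name: str,
                      street: str, city: str, postal_code: str) -> Customer:
    # Creating both objects together keeps the 1-to-1 rule intact:
    # no Customer without an Address, and no shared or orphaned Address.
    return Customer(customer_number, last_name,
                    Address(street, city, postal_code))


husband = register_customer(1234567, "Smith", "1 Main St", "Springfield", "12345")
wife = register_customer(1234568, "Smith", "1 Main St", "Springfield", "12345")

# Same postal data, but two separate Address records.
print(husband.address == wife.address)  # True: equal values
print(husband.address is wife.address)  # False: distinct records
```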

The association between Customer and Account, on the other hand, is an example of what is generally called a 1-to-many relationship. There is a cardinality indicator of 1 on one side, and on the other side, the 0..* notation is shorthand for “zero or many”.

This means that the number of Account instances that a Customer can be linked to can be between “zero” and “many”. So a customer in the system might have no accounts at all, or he or she might have one account, or he or she might have two or twenty or even more accounts.

But the 1 on the other end of the association means that an Account can only belong to one Customer; two Customers cannot share an Account (at least according to this data model).
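A 1-to-many association can be sketched in Python roughly as follows. The `open_account` helper and the account number strings are assumptions for illustration, not something taken from the diagram.

```python
class Account:
    def __init__(self, account_number: str, owner: "Customer") -> None:
        self.account_number = account_number
        self.owner = owner  # the "1" end: exactly one Customer


class Customer:
    def __init__(self, customer_number: int) -> None:
        self.customer_number = customer_number
        self.accounts: list[Account] = []  # the "0..*" end: zero or many

    def open_account(self, account_number: str) -> Account:
        # Hypothetical helper that links both ends of the association.
        account = Account(account_number, owner=self)
        self.accounts.append(account)
        return account


john = Customer(1234567)           # a customer with no accounts yet
alice = Customer(2345678)
alice.open_account("ACC-1")
alice.open_account("ACC-2")

print(len(john.accounts))   # 0
print(len(alice.accounts))  # 2
```

Because each Account holds a single `owner`, there is no way in this sketch for two customers to share an account, which matches the cardinality in the model.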

Inheritance (specialization/generalization) relationships

Another type of relationship is the inheritance relationship, drawn with a plain line with an open triangular arrowhead at one end.

If you see an inheritance relationship where the arrowhead points from, say, entity Dachshund to entity Dog, this indicates that entity Dachshund inherits the properties of Dog. Dachshund has a copy of all of the properties of Dog, but may have additional properties of its own. We can say that Dachshund is a subclass of Dog, or we may say that Dachshund is a specialization (or a specialized type) of Dog. And if Dog had multiple subclasses, like Dachshund, BlackLab, and Poodle, then we would say Dog represents the generalization of the commonalities amongst the subclasses.

In our banking example above, there is an entity called Account, and below it, there are two entities, CheckingAccount and SavingsAccount, which are specialized types (subclasses) of Account.

Instances of the CheckingAccount entity take on all of the attributes and operations of Account, and additionally have the attributes and operations specific to CheckingAccount. So a CheckingAccount instance has the attributes AccountNumber, AccountOpenedDate, Balance, and OverdraftLimit, and can perform the operations MakeDeposit, MakeWithdrawal, and ChargeFees. Likewise, a SavingsAccount instance has the attributes AccountNumber, AccountOpenedDate, Balance, and InterestRate, and can perform the operations MakeDeposit, MakeWithdrawal, ChargeFees, and ApplyInterest.
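Here is a minimal Python sketch of this inheritance structure. The data types, the `amount` parameters, and the way interest is calculated are all assumptions. ChargeFees is left out because the text does not say which box it is declared in.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal


@dataclass
class Account:
    account_number: str
    account_opened_date: date
    balance: Decimal

    def make_deposit(self, amount: Decimal) -> None:
        self.balance += amount

    def make_withdrawal(self, amount: Decimal) -> None:
        self.balance -= amount


@dataclass
class CheckingAccount(Account):
    overdraft_limit: Decimal  # added on top of the inherited attributes


@dataclass
class SavingsAccount(Account):
    interest_rate: Decimal  # assumed to be a fraction, e.g. 0.02 for 2%

    def apply_interest(self) -> None:
        # Assumed calculation, for illustration only.
        self.balance += self.balance * self.interest_rate


savings = SavingsAccount("SAV-1", date(2024, 1, 15),
                         Decimal("1000"), Decimal("0.02"))
savings.make_deposit(Decimal("500"))  # inherited from Account
savings.apply_interest()              # specific to SavingsAccount
print(savings.balance)                # 1530.00
```

Neither subclass repeats the account number, opened date, or balance; they come along automatically from `Account`.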

When a customer opens a checking account at the bank, a link is made between the Customer instance and a new CheckingAccount instance. And when a customer opens a savings account, a link is made between the Customer instance and a new SavingsAccount instance. Because a Customer can have between “zero” and “many” accounts, a Customer may be linked to multiple CheckingAccounts and SavingsAccounts, or none at all.

But a Customer will never actually be linked to an instance of Account. Why? Account is what we call an abstract supertype — no instances of that type can actually be created in the system. You can only create one of the subtypes. So if a customer wants to open a new account, he or she must choose between a CheckingAccount and a SavingsAccount, as no “general” Account can be created.

If you look closely, you will see in the diagram that the entity name “Account” is italicized. This italicization is the convention used in UML for marking an entity as being an abstract entity. Because this is so subtle visually, you can additionally add the notation “<<abstract>>” inside the top compartment of the box if you want to bring more attention to the fact.
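In code, an abstract entity might look something like the Python sketch below. Python's `abc` module only blocks instantiation when a class has at least one abstract method, so the `kind` method here is a hypothetical hook added purely for that purpose; `can_instantiate` is likewise just a helper for the demonstration.

```python
from abc import ABC, abstractmethod


class Account(ABC):
    def __init__(self, account_number: str) -> None:
        self.account_number = account_number

    @abstractmethod
    def kind(self) -> str:
        """Hypothetical hook; each concrete subtype must provide it."""


class CheckingAccount(Account):
    def kind(self) -> str:
        return "checking"


class SavingsAccount(Account):
    def kind(self) -> str:
        return "savings"


def can_instantiate(cls) -> bool:
    # Python raises TypeError when an abstract class is instantiated.
    try:
        cls("ACC-1")
        return True
    except TypeError:
        return False


print(can_instantiate(Account))          # False: no "general" Account
print(can_instantiate(CheckingAccount))  # True
print(can_instantiate(SavingsAccount))   # True
```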

Interpreting data models for user interface design

So why are data models so important in many projects?

First of all, the data model tells you what attributes are present for each entity. So if you are designing a form or a screen for editing a customer’s address, the data model tells you what fields you have to work with.

Likewise, the relationships between the entities are important for user interface designers to know, because these associations reveal how all of the data is related, and your application’s interface cannot be designed in such a way that it violates the relationships in the model. So if the data model allows customers to have multiple accounts, then you need to design the product to allow the user a way to view and access all of the accounts. This means that the product will look and behave differently than it would if customers were only permitted to have a single account.

Changing a data model to better fit the real world

You might say that some of the rules we’ve just discussed above for customers and addresses and accounts are not very practical for a real bank — two spouses should be able to share a joint account, for instance. And that’s correct — this is a highly oversimplified data model.

If you’re working with a data model that has been designed by somebody else, it’s often inevitable that you come to some disagreement over how the entities and relationships have been designed. In our bank example, you might argue that customers should be able to have multiple addresses on file, such as a work address as well as a home address. Or you might want the system to keep track of all of the address changes in the past. Or you might want address records to be shared by multiple customers in the same household, so that a change-of-address need only be done once, and the new address becomes effective for all of the customers sharing the address record.
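As a rough illustration of the last idea, here is what a shared address record could look like in Python. This is a sketch of one possible alternative model, not the model in the diagram, and the Address fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Address:
    # Hypothetical fields for illustration.
    street: str
    city: str


@dataclass
class Customer:
    first_name: str
    address: Address  # may now be shared with other customers


household_address = Address("1 Main St", "Springfield")
john = Customer("John", household_address)
jane = Customer("Jane", household_address)

# One change of address takes effect for everyone sharing the record.
household_address.street = "22 Oak Ave"
print(john.address.street)  # 22 Oak Ave
print(jane.address.street)  # 22 Oak Ave
```

The trade-off is that the interface then has to make it clear to the user that editing the address affects more than one customer.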

If the design of a data model is hindering your ability to design a usable interface, because the designer’s data model doesn’t quite match the way your users want or expect things to be, then you should propose changes to the data model and discuss them with your project team. Be prepared for some pushback: developers and project managers can be resistant to changing the data model because some types of changes can involve a lot of work, expense, and uncertainty.

Keep in mind that changing the data model early on in a project is much easier than making changes once the software has been built and is being used by users — there can be some very tricky data conversion and migration issues to consider.

Usability concerns in moving from a data model to screen designs

There is often a temptation to map one-to-one from data models to screen designs in a mechanical fashion, and in fact some systems exist that can translate data models or database schemas into rudimentary user interfaces. The use of entities often lends itself to having separate screens or tabs or other sections that correspond to various entities, but beware that this is not always desirable from a usability perspective. Sometimes there may be fields that logically belong near each other on the screen, but the fields may be stored in attributes in different entities in the model. And mimicking the data model too closely can often force the user to have to create the right instances of the right entities in the right places in order to get tasks done, which is a very poor approach from a usability perspective; this would essentially force the user to know all the details of the data model without having access to a copy of the data model diagram!

Focus on the users’ needs first. Use the data model to inform yourself of what entities and attributes are available, but then design the screens in a way that makes logical sense. Think about the work and tasks that the users have to do, and design task flows that guide them through the work with a minimum of memorization. Focus on designing a user-friendly experience first, and then worry about defining the mappings between the on-screen elements and the data model.
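One common way to keep the screen design independent of the entity boundaries is to define a separate "form" or "screen" object and map it to the entities behind the scenes. The Python sketch below assumes a hypothetical contact details screen; the `ContactDetailsForm` class, the two mapping functions, and the Address fields are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Address:
    street: str
    city: str
    postal_code: str


@dataclass
class Customer:
    customer_number: int
    first_name: str
    last_name: str
    address: Address


@dataclass
class ContactDetailsForm:
    """Hypothetical screen model: one flat form, fields from two entities."""
    first_name: str
    last_name: str
    street: str
    city: str
    postal_code: str


def load_form(customer: Customer) -> ContactDetailsForm:
    # Gather the fields the user thinks of as belonging together.
    a = customer.address
    return ContactDetailsForm(customer.first_name, customer.last_name,
                              a.street, a.city, a.postal_code)


def save_form(form: ContactDetailsForm, customer: Customer) -> None:
    # Write each on-screen field back to the entity that stores it.
    customer.first_name = form.first_name
    customer.last_name = form.last_name
    customer.address.street = form.street
    customer.address.city = form.city
    customer.address.postal_code = form.postal_code


customer = Customer(1234567, "John", "Smith",
                    Address("1 Main St", "Springfield", "12345"))
form = load_form(customer)
form.street = "22 Oak Ave"  # the user edits a single field on the screen
save_form(form, customer)
print(customer.address.street)  # 22 Oak Ave
```

The user sees one screen organized around the task, and never needs to know that the name and the street live in different entities.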

Summary

While this brief introduction to UML class diagrams only scratches the surface, it will hopefully get you started if you’re a user interface designer who hasn’t encountered data models before and now needs to be able to read and interpret them in a project. If you want to learn more about UML, a web search will uncover plenty of useful resources.